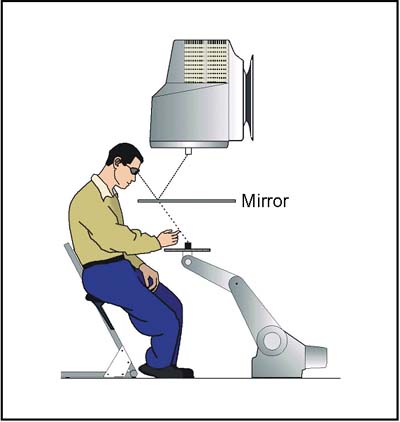

We are now using virtual reality displays, both in our own lab and in the Virtual Environment Technologies Centre (VETC) here in London, to study the perception of object dimensions and the visual control of grasping. The VR displays allow us to control the visual appearance of objects with enormous precision from trial to trial. The first virtual workbench was built in my own lab with the help of my former graduate student, Yaoping Hu. Yaoping completed her Ph.D. in Engineering and is now a faculty member in the Department of Electrical and Computer Engineering at the University of Calgary. Using the virtual workbench, Yaoping and I were able to show that, even though the judgments of object size are influenced by the presence of other objects in the visual array, the scaling of grasping movements directed at those same objects is not. This fits well with the work on grasping illusions and suggests that perception uses scene-based frames of reference and relative metrics while the visual control of grasping uses egocentric frames of reference and absolute metrics.

The reason that perception and action use different frames of reference is clear. If perception were to attempt to deliver the real metrics of all objects in the visual array, the computational load would be astronomical. The solution that perception appears to have adopted is to use world-based coordinates, in which the real metric of that world need not be computed. Only the relative position, orientation, size and motion of objects are of concern to perception. Such relative frames of reference are sometimes called allocentric. The use of relative or allocentric frames of reference means that we can, for example, watch the same scene unfold on television or on a movie screen without being confused by the enormous absolute change in the coordinate frame.

As soon as we direct a motor act towards an object, an entirely different set of constraints applies. We cannot rely on the perception system's allocentric representations to control our actions. We cannot, for example, direct actions toward what we see on television, however compelling and ‘real’ the depicted scene might be. To be accurate, the actions must be tuned to the metrics of the real world and the movements we make must be computed within an egocentric frame of reference that is specific to the effector that is being employed at the time.

Hu, Y., & Goodale, M.A. (2000). Grasping after a delay shifts size-scaling from absolute to relative metrics. Journal of Cognitive Neuroscience, 12, 856-868.