Attention and Speech Processing

About Ingrid Johnsrude

Dr. Ingrid Johnsrude is a Western Research Chair in Cognitive Neuroscience at Western University, and holds a joint appointment in the Department of Psychology and School of Communication Sciences and Disorders. Her research interests include the neural basis of speech understanding, how people understand speech in challenging listening situations, diagnosing and treating hearing problems, and brain disease in the aging and elderly. Members of Dr. Johnsrude's lab employ methods such as brain imaging and recording electrical brain activity in their research.

Research

We encounter speech in a variety of settings and at varying levels of clarity, and our ability to extract meaning from it depends on numerous factors. Topics we have explored in the CoNCH Lab (Cognitive Neuroscience of Communication and Hearing) include the types of information we use to aid our understanding of speech, how we use this information, and the effects of a listener’s cognitive state on their ability to understand speech.

Study

We are often exposed to speech in a variety of settings, some noisy (e.g., talking to a friend in a busy mall) and some not so noisy (e.g., speaking to the same friend at home). Although the physical properties of the speech may be similar in these settings, our ability to comprehend its meaning, also known as the intelligibility of speech, differs.

An fMRI study by Conor Wild (who was a successful PhD candidate in the CoNCH Lab) observed that attention enhances the processing of degraded (i.e., less intelligible) speech. In the study, participants were scanned in an MR scanner while they were presented with four types of speech ranging from very intelligible to not-at-all intelligible. At the same time, they were instructed to attend to either the speech stimulus, a visual distractor task, or an auditory distractor task. Following the scanner portion of the experiment, participants were given a surprise sentence recognition task and asked to indicate whether they recalled hearing each sentence while they were in the scanner.

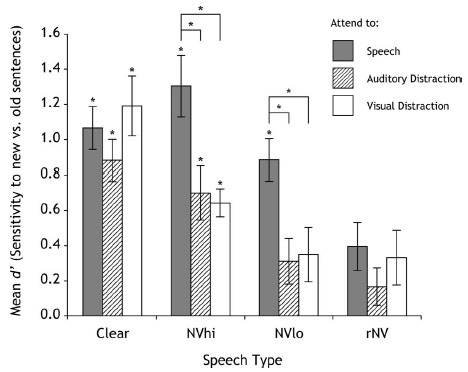

Sentence recognition data (see bar graph) indicated that when participants attended to degraded-but-intelligible speech (NVhi and NVlo), they remembered the sentences better than when they directed their attention to the distractor tasks.

In contrast, participants’ attentional state (i.e., whether they were attending to the speech stimulus or a distractor task) did not affect the recognition of clear speech. Taken together, the behavioural results indicate that the way we process degraded speech is modulated by our attentional state.

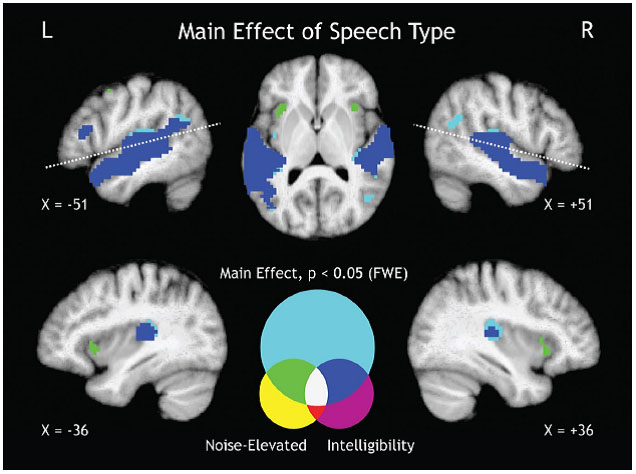

BOLD results indicated that bilateral activation along the superior temporal sulcus (STS) was correlated with speech intelligibility (see the blue voxels in the Main Effect of Speech figure). Speech processing is thought to follow a hierarchical process, where processing extends both anteriorly and posteriorly along the STS from early auditory sensory regions (Peelle, Johnsrude, & Davis, 2010).

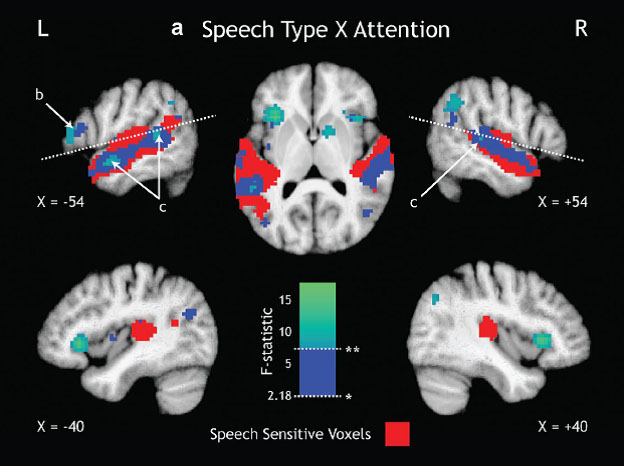

When attending to degraded speech, participants appeared to engage additional higher-order mechanisms to fully process the speech, beyond those recruited for processing clear speech. Significant BOLD responses were observed in frontal regions when participants attended to degraded speech (see the turquoise/green voxels in the Speech Type x Attention figure, and the green voxels in the Main Effect of Speech Type figure).

We believe these results are indicative of effortful listening: the recruitment of higher-order cognitive processes to improve processing of less intelligible speech. This higher-order cognitive processing appears to be restricted to the left inferior frontal gyrus (LIFG), but the nature of the recruited processing is still debated. The LIFG may perform a working-memory-type process, holding the speech stimulus in memory while semantic information is extracted. It follows that for less intelligible speech, the stimulus would need to be held in memory longer to account for the greater difficulty of extracting meaning from noisier information.

Current work in the CoNCH Lab is investigating the effects of a listener's working memory load and attentional load on their effortful listening ability.